In the flickering glow of vacuum tubes and the rhythmic hum of early calculating machines, a revolution was brewing. It wasn’t a revolution of armies or empires, but one of tiny, silicon-based miracles that would redefine the very fabric of human existence. This is the story of the semiconductor, the invisible heart of our modern world, and the relentless pursuit of packing ever more of its power onto ever-smaller chips.

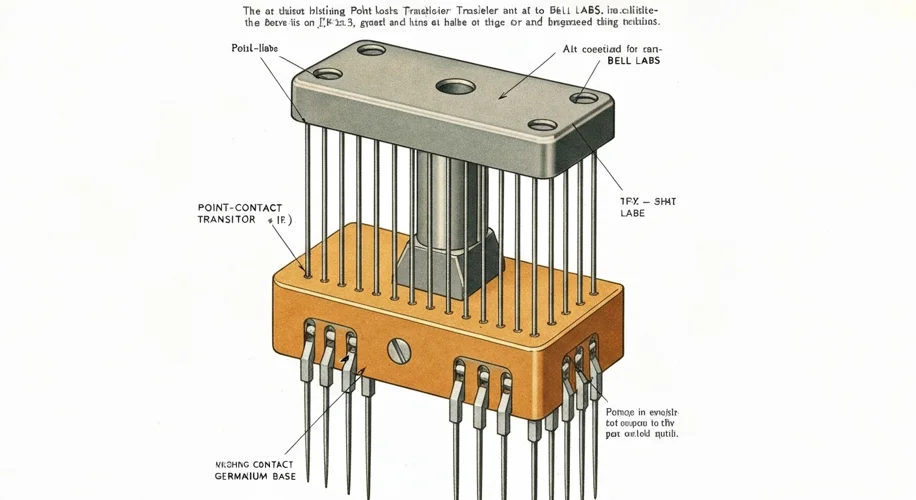

Our journey begins not with sleek, silver devices, but with a hard-won discovery in a corporate research lab. In 1947, at Bell Laboratories, a team of physicists – John Bardeen, Walter Brattain, and William Shockley – was wrestling with the limitations of bulky, power-hungry vacuum tubes. They sought a solid-state alternative: a material that could amplify or switch electronic signals. Their breakthrough was the transistor, a device no bigger than a fingernail that could perform the same functions as a vacuum tube while consuming far less power and generating far less heat. This was the seed from which the semiconductor industry would bloom.

Imagine the scene: a cramped laboratory, the air thick with the smell of ozone and dedication. The early transistors were fragile, prone to failure, and difficult to mass-produce. Yet their potential was undeniable. The post-war era was fertile ground for innovation, fueled by a pressing need for more compact and reliable electronics, particularly for military applications and the burgeoning field of telecommunications. The culture was one of intense scientific collaboration and competition, with minds like Bardeen, Brattain, and Shockley pushing the boundaries of what was thought possible.

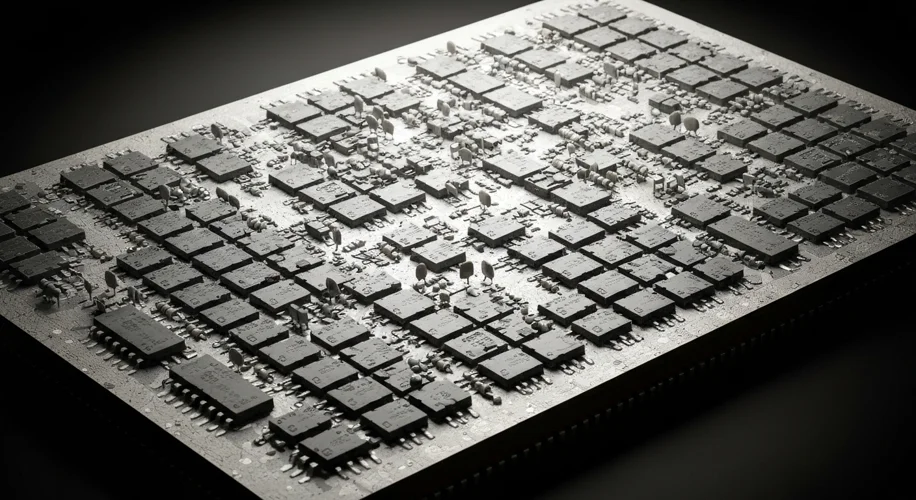

But the transistor, while revolutionary, was just the beginning. The next giant leap was the integrated circuit, or IC. Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor independently conceived of ways to build multiple transistors and other components on a single piece of semiconductor material. This was the birth of the microchip. In 1958, Kilby demonstrated a rudimentary IC built on germanium, and in 1959 Noyce filed a patent for a more practical silicon version based on the planar process. The era of miniaturization had truly begun.

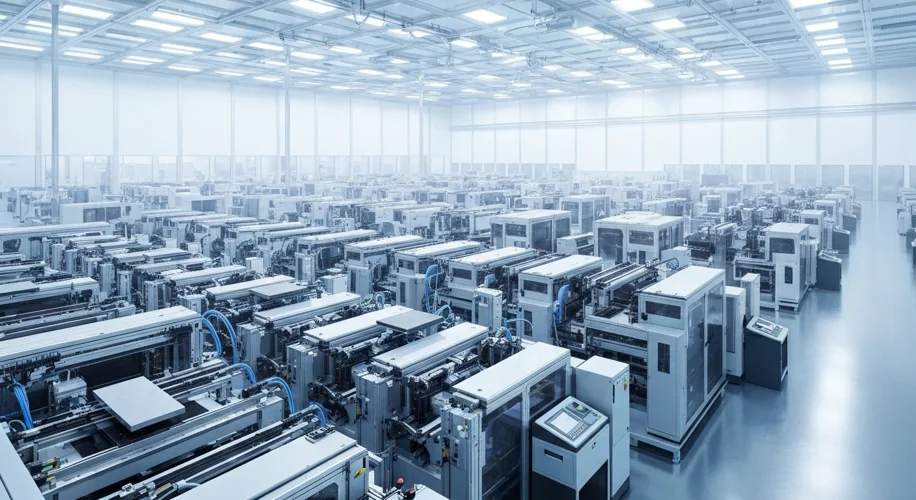

The 1960s and 70s saw a feverish pace of development. Companies like Intel, founded by Gordon Moore and Robert Noyce in 1968, became pioneers in this new frontier. Moore’s Law, Moore’s observation that the number of transistors on a chip doubles roughly every two years (he first estimated a yearly doubling in 1965, then revised it to two years in 1975), became the industry’s guiding star, driving a relentless pursuit of smaller, faster, and cheaper chips.

The process of manufacturing these intricate devices was itself a marvel of engineering. Photolithography, a technique adapted from photography and printing, became the key. Imagine a microscopic stencil: light shone through a patterned mask transfers incredibly complex designs onto a light-sensitive coating on the silicon wafer, and chemical etching then carves those patterns into the material, layer by meticulous layer. The purity of the silicon, the precision of the patterning, and the cleanliness of the manufacturing environment, known as a cleanroom, became paramount.
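To make the arithmetic behind Moore’s Law concrete, here is a minimal sketch that projects a transistor count under an idealized, constant doubling period. The function name `projected_transistors` is hypothetical, and the starting point of about 2,300 transistors in 1971 (the figure usually cited for Intel’s 4004) is used only for illustration; real chips never tracked the curve exactly.

```python
def projected_transistors(n0: float, start_year: int, year: int,
                          doubling_period: float = 2.0) -> float:
    """Project a transistor count under an idealized Moore's Law.

    Assumes the count doubles every `doubling_period` years, so
    N(year) = n0 * 2 ** ((year - start_year) / doubling_period).
    """
    if doubling_period <= 0:
        raise ValueError("doubling_period must be positive")
    # How many doublings fit into the elapsed time (may be fractional).
    doublings = (year - start_year) / doubling_period
    return n0 * 2 ** doublings


if __name__ == "__main__":
    # Illustrative starting point: roughly 2,300 transistors in 1971.
    for y in (1971, 1981, 1991, 2001):
        print(y, round(projected_transistors(2300, 1971, y)))
```

With a two-year doubling period, each decade contains five doublings, so the count grows by a factor of 2^5 = 32 every ten years: the sketch prints 2,300 for 1971, 73,600 for 1981, 2,355,200 for 1991, and 75,366,400 for 2001.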

This era was characterized by fierce competition and a dynamic, often volatile, industry landscape. Companies rose and fell, fortunes were made and lost, and the geographical centers of innovation shifted. The United States, particularly Silicon Valley in California, became the undisputed heartland of semiconductor innovation. However, the industry’s complexity and the sheer scale of investment required for advanced manufacturing began to pave the way for new global players.

The late 20th century witnessed the rise of Asian manufacturing powerhouses, most notably Taiwan and South Korea. TSMC (Taiwan Semiconductor Manufacturing Company), founded in 1987, pioneered the “pure-play” foundry model of manufacturing chips designed by other companies, while Samsung grew into a leading producer of memory chips. Both became far more than contract manufacturers: they emerged as innovators in the very process of chip fabrication, mastering high-volume, high-precision production and becoming indispensable to the global supply chain. This shift also introduced new geopolitical dimensions, as the concentration of advanced chip manufacturing in a few key regions highlighted potential vulnerabilities.

The impact of semiconductor technology is so pervasive that it’s almost invisible. From the smartphones in our pockets to the complex algorithms powering artificial intelligence, from the medical devices saving lives to the satellites exploring the cosmos, nearly every aspect of modern life is underpinned by these tiny silicon wonders. The evolution of the industry has been a story of human ingenuity, continual optimization, and a constant striving to push the boundaries of physics and engineering. The early breakthroughs, born out of a desire for better electronics, have cascaded into a technological revolution that continues to reshape our world at an astonishing pace.

Looking back, the history of semiconductor and chip manufacturing is not just a tale of technological advancement, but a testament to the power of scientific curiosity, collaborative spirit, and the relentless human drive to innovate. It’s a story that continues to unfold, promising even more astonishing developments in the decades to come.